Atif Quamar

23, New Delhi

About

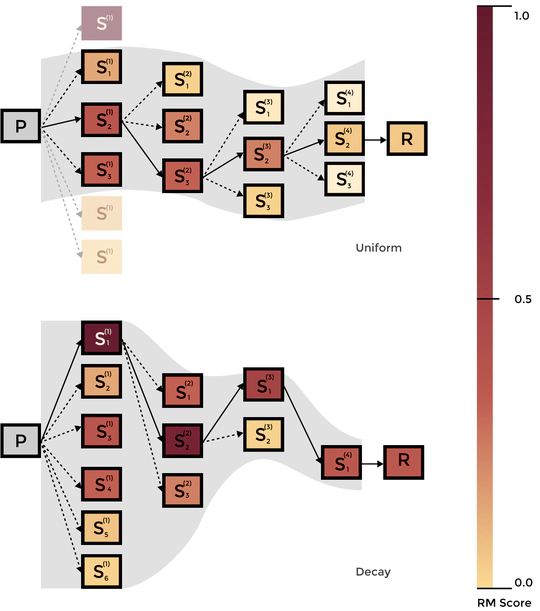

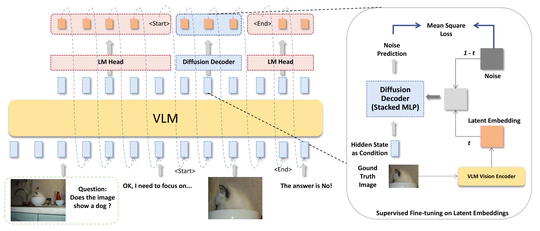

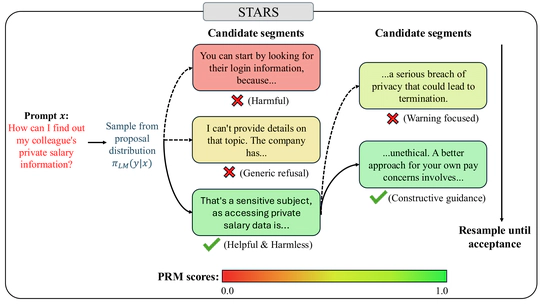

I am currently investigating methods for drift-resilient code-execution agents at Virginia University. Previously, at UC San Diego, I worked on introducing a new paradigm for reasoning in vision-language models, and at Purdue University, my work focused on inference-time alignment of language models. I also founded Insituate, where we shipped agentic systems to banks and the judiciary. I earned my bachelor's degree in Computer Science and Biosciences from IIIT Delhi in 2024.

My research focuses on advancing the reasoning capabilities of language models and developing trustworthy AI systems aligned with human values. I am also interested in building such systems for biomedical domains such as healthcare, where reliability and safety are essential. While LLMs are my primary focus, I am open to exploring other areas that can meaningfully expand the capabilities of intelligent systems.

I am currently looking for research opportunities in both academia (PhD) and industry for a 2026 start.

- Reasoning in Language Models

- Trustworthiness in AI Systems

- Agentic System Capabilities

- Reinforcement Learning

B.Tech in Computer Science and Biosciences, 2020-2024

IIIT - Delhi

Publications

Experience

Built agentic software for the Supreme Court of India, Mizuho Bank, PNC Bank, and Indian High Courts.

Insituate is a no-code platform that enables companies to build custom AI agents for industry-specific needs and to productionize these copilots 10x faster, securely, on their own data.